History of Artificial Intelligence

The quest to create intelligent machines is not solely a modern endeavor. It reaches back to ancient myths and stories of automatons and artificial beings brought to life by master craftsmen.

These early imaginings, though fantastical, laid the groundwork for our enduring fascination with imbuing machines with human-like intelligence. This pursuit has manifested in various forms throughout history, from intricate clockwork contraptions to modern-day robots and self-driving cars.

However, the true journey of artificial intelligence (AI) as a scientific discipline began in the mid-20th century, fueled by a confluence of groundbreaking ideas and rapid advancements in computing technology.

The Genesis of AI: A Spark of Inspiration

While the seeds of AI were sown in earlier centuries, the field officially took shape in 1956. That year, researchers from diverse disciplines (mathematics, psychology, engineering, economics, and political science) gathered for a workshop held on the campus of Dartmouth College. This gathering, titled the “Dartmouth Summer Research Project on Artificial Intelligence,” was organized by John McCarthy, the computer scientist who coined the term “artificial intelligence,” together with Marvin Minsky, Nathaniel Rochester, and Claude Shannon. The workshop aimed to explore the audacious possibility of creating machines that could simulate aspects of human intelligence, such as using language, forming abstractions, solving problems, and even improving themselves.

This Dartmouth workshop is widely considered the birth of AI as an academic discipline, marking a significant turning point in the quest for intelligent machines. It was here that researchers laid the foundation for many of the core concepts and techniques that would shape the field in the decades to come. One key idea that predated the workshop and framed much of the early debate was the Turing Test, proposed by Alan Turing in his landmark 1950 paper “Computing Machinery and Intelligence.” This test, which asks whether a machine can exhibit conversational behavior indistinguishable from that of a human, has become one of the most widely discussed reference points for evaluating machine intelligence.

Amidst the flurry of ideas and discussions at Dartmouth, one of the early “aha” moments in AI research came with the Logic Theorist, presented in 1956. This groundbreaking program, created by Allen Newell, Herbert A. Simon, and Cliff Shaw, is often cited as the first true AI program. The Logic Theorist was designed to prove mathematical theorems in the field of symbolic logic, mimicking the reasoning processes of a human mathematician. Its ability to prove theorems from Whitehead and Russell’s Principia Mathematica sparked excitement and optimism about the potential of AI to replicate and even surpass human cognitive abilities.

Another significant milestone in the early days of AI was the creation of ELIZA in the mid-1960s by Joseph Weizenbaum at MIT. ELIZA was a computer program that could simulate a conversation with a human. It did not understand language in any deep sense: it relied on pattern matching and simple rules to turn the user’s typed input into a response. Even so, it provided an early glimpse into the potential of AI to interact with humans in a natural and intuitive way. ELIZA’s ability to engage in seemingly meaningful conversations, despite its rudimentary handling of language, captured the imagination of many and further fueled the growing interest in AI.
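The pattern-matching approach described above can be illustrated with a minimal sketch. This is not Weizenbaum’s original script: the rule table, the reflection dictionary, and the function names `reflect` and `respond` are all hypothetical, chosen only to show how a few patterns and templates can produce conversation-like replies.

```python
import re

# Hypothetical rule table: (pattern, response template).
# These rules are illustrative and are not taken from the original ELIZA script.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first-person words for second-person ones so echoed fragments read naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "mine": "yours"}

DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Lowercase the fragment and replace first-person words with second-person ones."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input: str) -> str:
    """Return the reply of the first matching rule, or a generic prompt if none match."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY
```

With these assumed rules, `respond("I need my coffee.")` returns “Why do you need your coffee?”, while an input that matches no rule falls back to “Please go on.” The program never models what coffee is; it only rearranges the user’s own words, which is why such conversations feel meaningful for a few turns and then break down.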

Early AI in Real Life: Hidden in Plain Sight

While these early AI programs demonstrated the potential of machines to exhibit intelligent behavior, many early AI applications were not readily apparent to the average person. These hidden gems of AI were quietly transforming various aspects of our lives, often without us even realizing it.

One such example is the development of game AI. In 1952, Arthur Samuel, a computer scientist at IBM, began developing a program that could play checkers. This program, remarkable for its ability to learn and improve its performance over time, is regarded as one of the first programs to learn a game on its own. It laid the foundation for future advancements in game AI, culminating in milestones like Deep Blue, a chess-playing computer developed by IBM, defeating world chess champion Garry Kasparov in a highly publicized match in 1997.

Another example of early AI in action is the development of speech recognition software in the 1990s. This technology, which allows computers to understand and respond to spoken commands, has become ubiquitous in our daily lives, powering virtual assistants like Siri and Alexa, voice search on our smartphones, and dictation software that converts spoken words into text. While we may take these technologies for granted today, they represent significant achievements in AI research and have transformed the way we interact with computers and information.

Beyond these everyday applications, AI was also making its mark in more specialized domains. By 2000, Cynthia Breazeal at MIT had developed Kismet, a robot with a face that could express emotions. Kismet, with its expressive eyes, eyebrows, ears, and mouth, was a pioneering example of social robotics and human-robot interaction. Its ability to simulate human emotions, even in a limited way, opened up new possibilities for robots to engage with humans on a more personal and emotional level.

Around the same time, in 2002, iRobot introduced the Roomba, one of the first commercially successful autonomous vacuum cleaners. This seemingly simple household appliance was a significant step forward in robotics and AI, demonstrating the potential of robots to perform everyday tasks autonomously. The Roomba’s ability to navigate and clean floors without human intervention captured the imagination of many and paved the way for the development of more sophisticated household robots.

AI was also venturing beyond the confines of Earth. In 2003, NASA launched two rovers, Spirit and Opportunity, which landed on Mars in January 2004. These rovers, equipped with autonomous navigation software, could plan parts of their own routes across the Martian surface, collecting valuable data and sending back stunning images of the Red Planet. This remarkable feat showcased the application of AI in space exploration and its potential to extend human reach beyond our own planet.

Even in the realm of marketing and entertainment, AI was quietly making its presence felt. Recommendation technology, which uses AI algorithms to analyze user preferences and suggest products, services, or content, became increasingly prevalent in the early 2000s. This technology, which powers recommendation engines on platforms like Amazon, Netflix, and Spotify, has transformed the way we discover and consume information and entertainment.

The Driving Force Behind AI: A Quest for Knowledge and Progress

The motivations behind early AI research were as diverse as the researchers themselves. Some were driven by a pure desire to understand the nature of human intelligence and replicate it in machines, pushing the boundaries of what was considered possible.

Others saw AI as a powerful tool to solve complex problems and automate tasks that were previously thought to require human intelligence, freeing up human potential for more creative and meaningful endeavors.

The Dartmouth conference, in particular, highlighted some of the key motivations driving AI research. As stated in the workshop proposal, the goal was to explore “how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.” This ambitious vision captured the essence of AI research and its potential to revolutionize various aspects of human life.

Advancements in computing technology also played a crucial role in fueling the development of AI. As computers became more powerful, with increased processing speed and memory capacity, researchers could develop more sophisticated AI algorithms and models. This led to breakthroughs in areas such as machine learning, natural language processing, and computer vision, enabling AI systems to perform increasingly complex tasks and learn from data in ways that were previously unimaginable.

The Evolution of AI: From Simple Programs to Complex Systems

The evolution of AI has been a fascinating journey, marked by periods of rapid progress and periods of stagnation, often referred to as “AI winters.”

These winters, characterized by decreased funding and waning enthusiasm, were often triggered by unmet expectations and the limitations of early AI systems. However, despite these challenges, AI has continued to advance, driven by new ideas, technological breakthroughs, and a growing understanding of the complexities of intelligence.

Early AI systems were primarily rule-based, relying on explicit instructions and predefined knowledge to perform tasks. These systems excelled in well-defined domains with clear rules, such as playing chess or proving mathematical theorems. However, they struggled to handle the complexities and uncertainties of the real world.

As the field matured, researchers began to explore new approaches, such as machine learning, which allows AI systems to learn from data and improve their performance over time. This shift from rule-based AI to data-driven AI marked a significant turning point in the evolution of AI. One of the early examples of machine learning was the Perceptron algorithm, developed by Frank Rosenblatt in the late 1950s. This algorithm, which could learn to tell simple visual patterns such as geometric shapes apart, laid the groundwork for later developments in deep learning and neural networks.

The availability of large datasets, coupled with advancements in computing power, further accelerated the progress of machine learning. AI systems could now be trained on massive amounts of data, enabling them to learn complex patterns and make more accurate predictions. This led to breakthroughs in areas such as image recognition, natural language processing, and speech recognition, paving the way for the development of AI applications that could understand and interact with the world in more human-like ways.

Schools of Thought in AI: A Tapestry of Ideas

The field of AI is not a monolithic entity but rather a tapestry of diverse schools of thought, each with its own approach to creating intelligent machines. These different perspectives have enriched the field, leading to a wide range of AI applications across various domains.

One of the major schools of thought is symbolism, also known as logicism or good old-fashioned AI (GOFAI). This approach focuses on using symbols and logical rules to represent knowledge and reason about the world. Symbolic AI systems excel in tasks that require logical reasoning and symbolic manipulation, such as playing chess or proving mathematical theorems. However, they often struggle to handle the complexities and uncertainties of the real world, where knowledge is often incomplete or ambiguous.

Another prominent school of thought is connectionism, which emphasizes the use of artificial neural networks to simulate the structure and function of the human brain. Connectionist AI systems, inspired by the biological neural networks in our brains, are particularly well-suited for tasks that involve pattern recognition, such as image recognition and natural language processing. These systems learn by adjusting the weights of connections between artificial neurons, allowing them to adapt to new data and improve their performance over time.
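The idea of learning by adjusting connection weights can be sketched with a single artificial neuron trained by the classic perceptron rule. This is a toy illustration, not a reconstruction of any historical system: the function names `train_perceptron` and `predict`, the learning rate, and the logical-AND dataset are all assumptions made for the example.

```python
def train_perceptron(samples, labels, learning_rate=1.0, epochs=20):
    """Train one artificial neuron with the perceptron rule.

    For each example the neuron makes a 0/1 prediction; each weight is then
    nudged in proportion to the error and to the input it is connected to.
    """
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in zip(samples, labels):
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            prediction = 1 if activation > 0 else 0
            error = target - prediction  # -1, 0, or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs exceeds zero, else 0."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


# Assumed toy dataset: the logical AND of two binary inputs.
AND_INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_LABELS = [0, 0, 0, 1]

weights, bias = train_perceptron(AND_INPUTS, AND_LABELS)
print([predict(weights, bias, x) for x in AND_INPUTS])  # prints [0, 0, 0, 1]
```

Nothing in the code states the rule for AND; the behavior emerges from repeated small corrections to the weights. A single neuron like this can only separate classes with a straight line, which is one reason modern connectionist systems stack many such units into layers.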

Evolutionary computation draws inspiration from biological evolution, using techniques such as genetic algorithms to evolve AI systems over time. In this approach, AI systems are treated as populations of individuals that undergo processes of selection, mutation, and reproduction. The fittest individuals, those that perform best on a given task, are more likely to survive and reproduce, passing on their traits to future generations. This process of evolution can lead to the emergence of AI systems that are well-adapted to their environment and capable of solving complex problems.
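The selection, mutation, and reproduction loop described above can be sketched as a small genetic algorithm. The task here is a deliberately simple stand-in (evolving a bit string with as many 1s as possible), and every name and parameter value (`fitness`, `select`, `crossover`, `mutate`, `evolve`, the tournament size, the mutation rate) is an assumption chosen for illustration rather than a standard setting.

```python
import random


def fitness(individual):
    """Toy objective: count the 1 bits in the individual."""
    return sum(individual)


def select(population, rng, tournament_size=3):
    """Tournament selection: the fittest of a few random individuals reproduces."""
    return max(rng.sample(population, tournament_size), key=fitness)


def crossover(parent_a, parent_b, rng):
    """Single-point crossover: the child takes a prefix from one parent and a suffix from the other."""
    point = rng.randrange(1, len(parent_a))
    return parent_a[:point] + parent_b[point:]


def mutate(individual, rng, rate=0.02):
    """Flip each bit independently with a small probability."""
    return [1 - gene if rng.random() < rate else gene for gene in individual]


def evolve(length=20, population_size=30, generations=40, seed=0):
    """Evolve a population and return the best individual plus the best fitness per generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(population_size)]
    history = [max(fitness(ind) for ind in population)]
    for _ in range(generations):
        elite = max(population, key=fitness)  # elitism: the current best always survives
        children = [
            mutate(crossover(select(population, rng), select(population, rng), rng), rng)
            for _ in range(population_size - 1)
        ]
        population = [elite] + children
        history.append(max(fitness(ind) for ind in population))
    return max(population, key=fitness), history


best, history = evolve()
print(fitness(best), history[0], history[-1])
```

Because the best individual is copied unchanged into each new generation, the best fitness recorded in `history` can never decrease. How quickly it climbs depends on the random seed and on the assumed parameters, so the sketch prints the result rather than promising a particular value.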

Beyond these core schools of thought, there are also various perspectives and motivations within the AI community. Some researchers, often referred to as “euphoric true believers,” are optimistic about the potential of AI to solve major global challenges and even achieve superhuman intelligence. Others, more cautious and pragmatic, focus on the practical applications of AI and its potential to enhance human capabilities. There are also “alarmist activists” who raise concerns about the potential risks and ethical implications of AI, advocating for responsible AI development and regulation.

These diverse perspectives and schools of thought have contributed to the richness and dynamism of AI research, driving innovation and pushing the boundaries of what is possible with intelligent machines.

The Impact of AI on Society: A Transformative Force

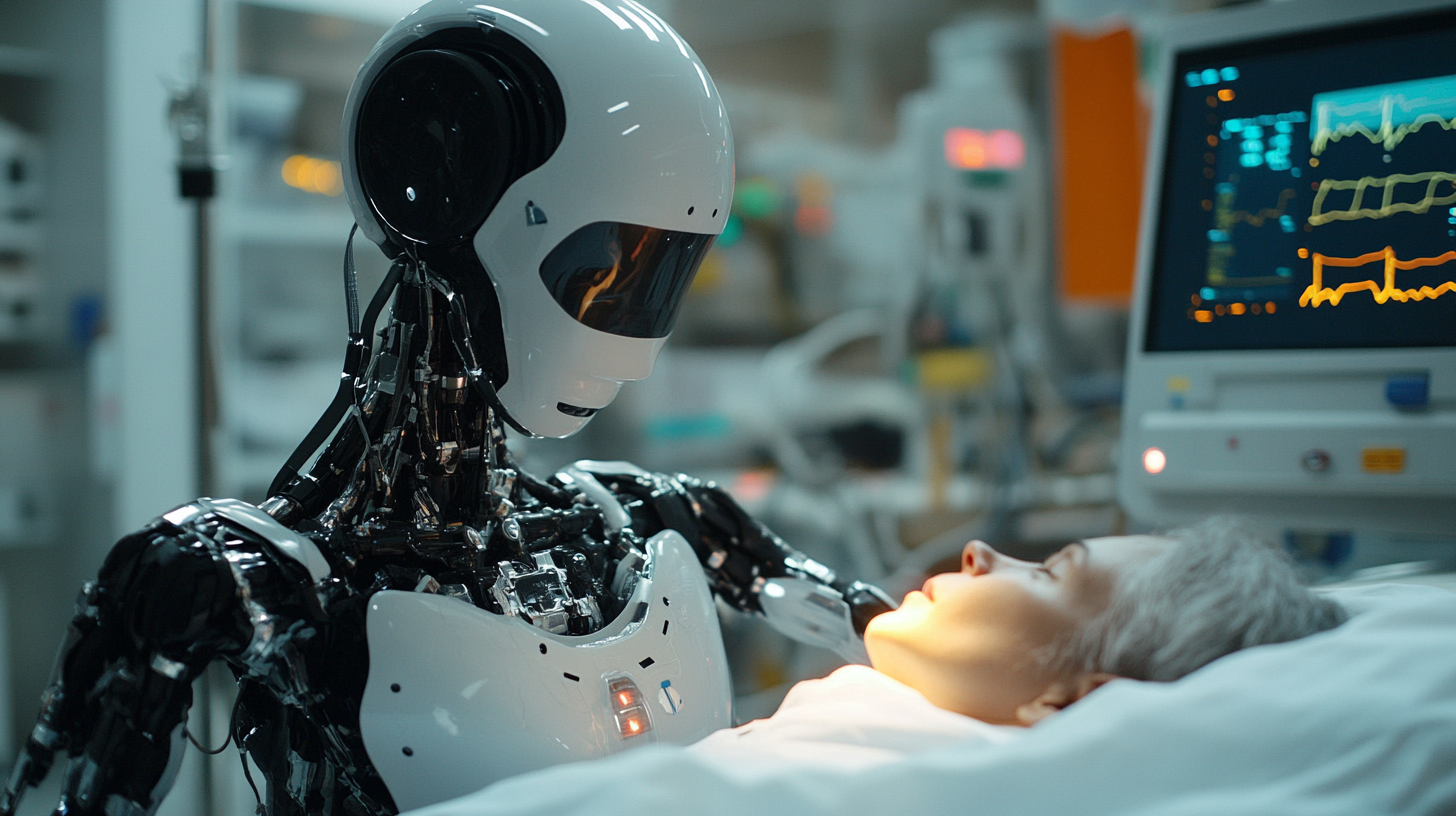

AI is no longer a futuristic concept confined to science fiction; it is a transformative force that is already reshaping our world in profound ways. AI-powered technologies are being used to automate tasks, improve decision-making, and enhance human capabilities in a wide range of fields, from healthcare and finance to transportation and education.

In healthcare, AI is being used to diagnose diseases, develop new treatments, and personalize patient care. AI-powered systems can analyze medical images, such as X-rays and CT scans, to detect abnormalities and assist doctors in making more accurate diagnoses. AI algorithms can also analyze patient data to identify individuals who are at risk of developing certain diseases, allowing for early intervention and preventive care.

In finance, AI is being used to detect fraud, manage risk, and provide personalized financial advice. AI-powered systems can analyze financial transactions to identify suspicious patterns and flag potential fraud. AI algorithms can also assess creditworthiness, predict market trends, and provide tailored investment recommendations to individual investors.

In transportation, AI is powering the development of self-driving cars, which have the potential to revolutionize the way we travel. Self-driving cars, equipped with AI-powered sensors and navigation systems, can navigate roads, avoid obstacles, and make driving safer and more efficient. AI is also being used to optimize traffic flow, reduce congestion, and improve the overall efficiency of transportation systems.

In education, AI is being used to personalize learning, provide individualized feedback, and automate administrative tasks. AI-powered tutoring systems can adapt to the learning styles and pace of individual students, providing customized lessons and feedback. AI algorithms can also analyze student data to identify areas where they need extra help, allowing teachers to provide more targeted support.

While AI offers tremendous potential for good, it also presents challenges and risks that need to be carefully considered. One of the major concerns is the potential for job displacement. As AI-powered systems become more capable, they may automate tasks that are currently performed by humans, leading to job losses in certain sectors. This raises important questions about the future of work and the need for retraining and upskilling programs to prepare workers for the changing job market.

Another concern is the potential for bias in AI systems. AI algorithms are trained on data, and if that data reflects existing biases in society, the AI system may perpetuate or even amplify those biases. This can lead to unfair or discriminatory outcomes, particularly in areas such as hiring, lending, and criminal justice. It is crucial to ensure that AI systems are developed and deployed in a way that is fair, unbiased, and equitable.

The increasing use of AI also raises concerns about privacy and security. AI systems often collect and analyze large amounts of personal data, which can be vulnerable to breaches or misuse. It is essential to have strong data privacy and security measures in place to protect sensitive information and prevent unauthorized access.

Despite these challenges, AI also has the potential to enhance human life and improve our world in many ways. AI can be used to address global challenges such as climate change, resource conservation, and disease prevention. AI-powered systems can analyze climate data to model scenarios and predict the effects of climate change, helping us to develop strategies for mitigation and adaptation. AI can also be used to optimize resource management, reduce waste, and develop more sustainable practices.

In environmental research, AI can analyze satellite imagery to track deforestation, monitor wildlife populations, and assess the health of ecosystems. AI algorithms can also be used to identify patterns and trends in environmental data, helping us to understand the complex interactions between human activities and the natural world.

One inspiring example of AI’s positive impact is its use in the restoration of coral reefs. AI-powered robots can be used to plant coral fragments, monitor reef health, and remove invasive species. This technology can help to accelerate the recovery of damaged reefs and protect these vital ecosystems for future generations.

AI also has the potential to enhance creativity and innovation. AI-driven tools can assist artists, musicians, and writers in generating new ideas, exploring different creative styles, and pushing the boundaries of their respective fields. AI algorithms can analyze vast amounts of creative content to identify patterns and trends, providing insights that can inspire new works of art, music, and literature.

The Future of AI: A Journey of Endless Possibilities

The future of AI holds immense potential, with advancements in areas such as natural language processing, computer vision, and robotics paving the way for new AI applications that could revolutionize industries and solve some of the world’s most pressing challenges.

One of the key trends shaping the future of AI is the development of user-friendly AI platforms. These platforms, similar to today’s website builders, will enable entrepreneurs, educators, and small businesses to develop custom AI solutions without requiring deep technical expertise. This democratization of AI will empower more people to harness the power of AI and create innovative applications across various domains.

However, the widespread adoption of AI also necessitates the development of robust AI regulations and ethical standards. Frameworks such as the EU AI Act are leading the way in establishing guidelines for responsible AI development and deployment. These frameworks classify AI systems into risk tiers and impose stricter requirements on high-risk AI applications, particularly in sensitive areas such as healthcare, finance, and critical infrastructure. AI models, especially generative and large-scale ones, may need to meet transparency, robustness, and cybersecurity standards to ensure their safe and ethical use.

AI is also poised to have a major impact on the future of work. As AI-driven automation becomes more prevalent, it will transform the job market, creating new roles and requiring new skills. While some jobs may be displaced by AI, others will be augmented by AI, allowing humans to focus on tasks that require creativity, critical thinking, and emotional intelligence. It is crucial to invest in education and training programs to prepare the workforce for the changing demands of the AI-powered economy.

Another important area where AI is expected to have a significant impact is predictive analytics. AI-driven predictive analytics can forecast future events and trends, enabling proactive problem-solving and decision-making. This technology can be applied to various domains, from predicting customer behavior and market trends to forecasting natural disasters and preventing disease outbreaks.

Conclusion: A Glimpse into the Ever-Evolving World of AI

The journey of AI has been a remarkable one, marked by groundbreaking discoveries, innovative ideas, and a relentless pursuit of knowledge. From its humble beginnings in the mid-20th century to its current state as a transformative force, AI has come a long way. As we continue to explore the possibilities of intelligent machines, the future of AI promises to be even more exciting and impactful.

The evolution of AI has been characterized by a cyclical pattern of hype and progress followed by periods of disillusionment and reduced funding, often referred to as “AI winters.” These cycles reflect the challenges and complexities of AI research, as well as the evolving expectations and understanding of what AI can achieve. However, despite these setbacks, AI has consistently emerged stronger, driven by new ideas, technological advancements, and a growing appreciation for the potential of intelligent machines.

One of the key takeaways from the history of AI is the shift from rule-based AI to data-driven AI. Early AI systems relied on explicit rules and predefined knowledge to perform tasks, but they often struggled to handle the complexities and uncertainties of the real world. The emergence of machine learning, coupled with the availability of large datasets and increased computing power, has enabled AI systems to learn from data and improve their performance over time. This shift has led to breakthroughs in various domains, from image recognition and natural language processing to robotics and predictive analytics.

As AI becomes more sophisticated and integrated into our lives, it is crucial to address the ethical and societal implications of its widespread adoption. Concerns about job displacement, bias, privacy, and the potential misuse of AI need to be carefully considered. Responsible AI development and regulation are essential to ensure that AI aligns with human values and serves the betterment of society.

The future of AI holds endless possibilities. As AI systems become more capable and user-friendly, they will empower more people to harness the power of AI and create innovative applications across various domains. AI has the potential to revolutionize industries, solve global challenges, and enhance human capabilities in ways that were once unimaginable. However, the future of AI also depends on our ability to develop and deploy AI responsibly, ensuring that it remains a force for good in the world.